Each summer semester, I teach the lecture “Concepts and Models of Parallel and Data-centric Programming” (abbreviated as PDP) at RWTH Aachen University. The lecture mainly attracts students in the Master's programs in Computer Science, Data Science, and some Computational Engineering programs. The decision to shift to online teaching was made about two weeks before the start of the lecture period. However, we had about two weeks of additional time for preparations because we had anticipated this move. Since we just received the lecture evaluation of this course, I wanted to use this opportunity to write down some thoughts on my personal experience with my first online course.

The moment we realized that the summer semester 2020 would not be a “regular” semester, we started discussing which format would be best suited for the expected situation. Here, “we” includes the teaching assistants Simon Schwitanski and Julian Miller, who support me, as well as Matthias Müller, the head of our institute. Back in March, before the start of the lecture period, we quickly realized that it was hard to predict the developments over the coming weeks and months. Much more importantly, we realized that we had little insight into the situations our students were in, in particular our international students. Consequently, we decided to maximize the flexibility we offered to those students who chose to take the summer semester 2020 seriously. At that time, it had not yet been decided that it would become a so-called optional semester in Germany.

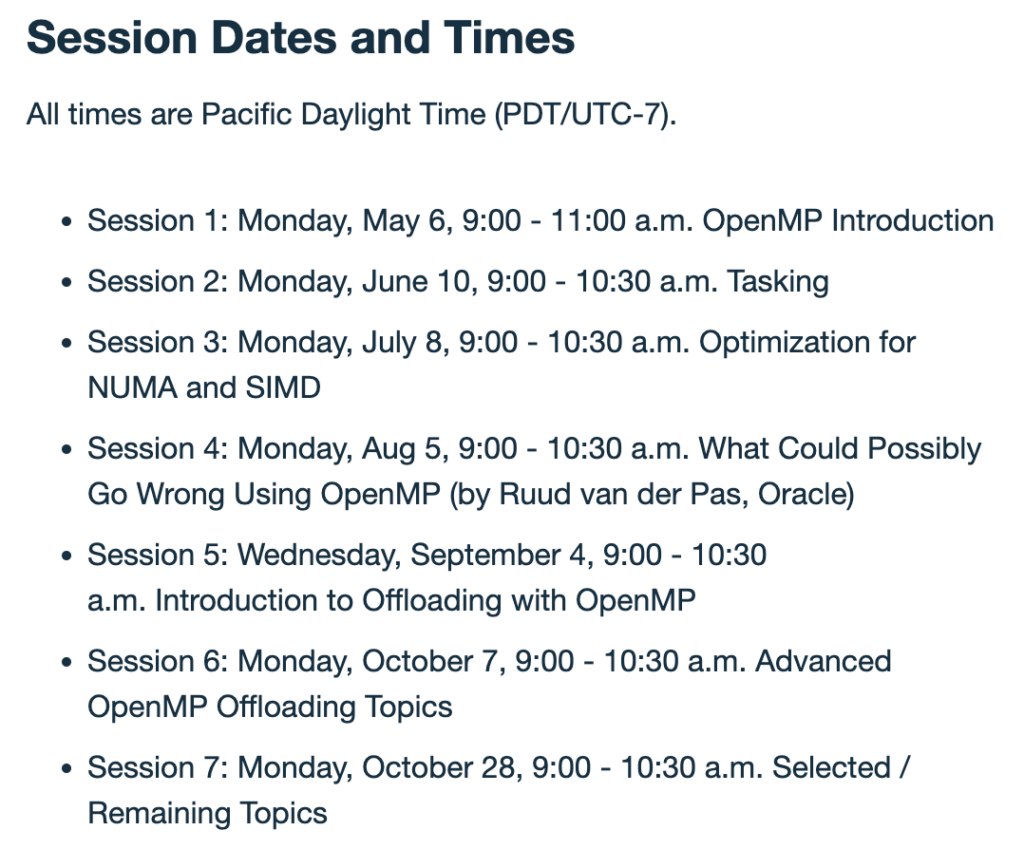

We decided to offer a format that I would call “Flipped Classroom Style”, because we borrowed many aspects of the Flipped Classroom (FC) as described in the literature, but did not fully implement it. As in FC, the lectures were recorded, and students were expected to watch these videos ahead of time. The scheduled class time was used to provide a brief review of the past video lectures, to answer students’ questions, and to explore selected topics in more detail. I consider the following details of our offer to be the most important, and together they make a good summary:

- The lecture was already well structured into Foundations and nine Chapters. We decided against recording full 90-minute lectures. Instead, we split the existing material into pieces of 20 to 40 minutes, producing 55 videos in total. The videos were recorded and produced with open source software (OBS, Kdenlive, Audacity) and provided in the Moodle course room. Students could watch them whenever they wanted, wherever they were, and with whatever effort they were able to invest.

- We expected the students to watch approx. 150 minutes of video per week. The content of these videos was covered in the discussion slots (= the scheduled class time) of the following week. Each discussion slot contained an overview of the material it was intended to cover, giving us the opportunity to emphasize the most important aspects and to explain complicated things again in different words. We held these sessions via Zoom.

- For each chapter, we provided a quiz (an activity type in Moodle). The quizzes mostly consist of simple recall and comprehension questions. Some quizzes ask the students to apply their knowledge to a simple task.

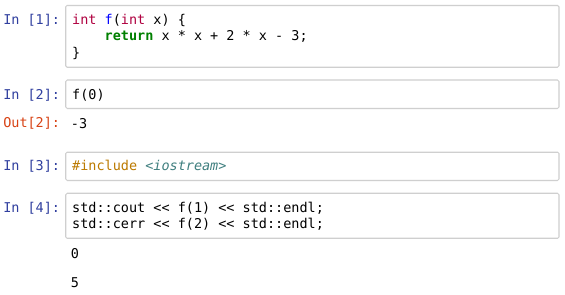

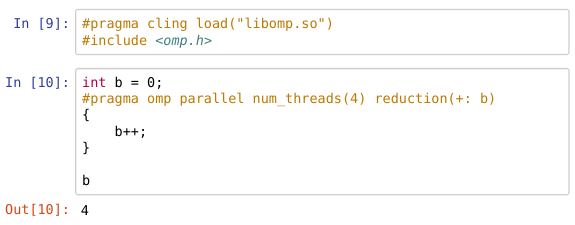

- We gave the students the option to run the exercises either bare-metal on the HPC system CLAIX at RWTH Aachen University or in a Jupyter environment that was developed in parallel in a different activity (see my first report on IkapP). Unlike the lectures, the exercise solutions were presented “live” (that is, in real time on the real system) via Zoom. Of course, we also had slides with instructions, code solutions, answers to theoretical questions, experiment details, and so forth. We provided these slides in the Moodle course room after the live presentation. No video was recorded for any exercise session.

A remark on the effort: about 200 students signed up for the lecture in RWTH’s student management system, about 100 students joined the Moodle course room, and again about 100 students registered for the final exam. Let us assume that giving two lectures per week takes 180 minutes of speaking plus 90 minutes of preparation, plus a bit of walking between the office and the lecture room. With the format outlined above, I would roughly estimate the effort to be about twice as high, because the videos have to be produced in advance and the scheduled class time still requires the lecturer’s presence. The effort for preparing the content itself is more or less the same (and that work was done before Corona happened).

I was really curious about the evaluation of the course. In summary, it was very good. German grades range from 1.0 (the best) to 5.0 (the worst). Students who, on average, invested two to four hours per week in course preparation and follow-up work gave us (here: me) the following grades: 1.3 for both the Lecture Concept and the Exercise Course, and 1.4 for both the Lecture and Exercise Instruction and Behavior.

This year, the questionnaire contained a few new questions focusing on the digital teaching aspect. I believe these questions were not phrased in a way that yields meaningful insight, but they did serve to validate that the students were paying attention when answering the questionnaire. Here is just an excerpt:

- Q: My enthusiasm to get involved with the contents of the course increased thanks to the digital teaching materials. A: no. My comment: I would not have expected any increase.

- Q: The interaction between students and lecturers is better than with face-to-face teaching. A: no. My comment: Had the answer been yes, I would have been disappointed.

- Q: The interaction between students is better than in face-to-face teaching. A: certainly not. My comment: Had the answer been yes, I would have been disappointed.

- Q: I prefer face-to-face teaching to digital teaching/courses. A: no. My comment: I hope that students develop a preference for Blended Learning.

What did students write in the free-text field about the advantages of digital teaching for this course? Here are only the most common answers, that is, those given independently by multiple students:

- Better health (more sleep, no Covid)

- Lectures/exercises can be paused or re-watched

- Less effort to participate, no travel time to the lecture/exercise

- Lower barrier to asking questions

To be honest, the last point prompted me to reflect on the lecture and to write this article. It seems at odds with our experience: according to our subjective impression, we received significantly fewer questions in the lecture and the exercise than in previous instances of the course. Why is that? Today, three students confirmed a good answer: students ask fewer questions because the videos make it easier to revisit the lecture content.

What did students write in the free-text field about what they particularly liked about this course? Many of them used this field for answers about digital teaching. Here is a digital teaching-related excerpt, again focusing on the points that were brought forward by multiple students:

- High quality of the provided digital materials, very well-defined structure, self-contained and clear explanations for students

- Concept of video lectures and weekly Q & A sessions

- Many small videos rather than a few big videos

- Great summaries and examples

- Very good and interactive exercises

- The quizzes

- The flipped classroom concept went pretty well

The last point also sums up my own view of the digital teaching concept we applied to this lecture, and I believe it worked much better than simply switching the lecture sessions to a video broadcast, in particular for our international students. In the meantime, we learned that some of them were unable to return to or stay in Germany (unfortunately, we do not have precise numbers). This confirmed our assumption about what would be best for the students.

For the next instance of this lecture, I would like to improve the videos. Although I hope to be able to teach in a classroom again, the students' feedback suggests that providing some videos would be beneficial. For instance, we could provide videos for those topics on which students scored the fewest points in the exam. I am currently preparing transcriptions of the videos (probably using Microsoft Azure Cognitive Services) to make the explanations a bit more cohesive. We are also thinking about how to address the two points of criticism we received:

- Not uploading exercise videos (students should participate online because they see the advantage and benefit of doing so, and not because they would otherwise miss the teacher's presentations and explanations. For example, I was once unable to attend the exercise session because an exam from last semester had been postponed because of Corona). My comment: I understand that we are not consistent here, but I explained our motivation for the exercise format above. We will think about it.

- It would have been better if we could actually see our professor moving. It is quite boring to never see him. My comment: For the coming winter semester, we are already planning to improve the video setup so that we can record a separate video of the lecturer. This was not practical in the home-office situation.