During last week’s SC24 conference in Atlanta, GA, I briefly reported on the activity of the Affinity subcommittee of the OpenMP language committee. One topic was that, together with the Tasking subcommittee, we brought support for taskloop affinity to OpenMP 6.0, which I am going to describe here.

As you are probably well aware, the OpenMP specification currently allows the depend and affinity clauses on task constructs. The depend clause provides a mechanism for expressing data dependencies among tasks, and the affinity clause is a hint that tells the OpenMP runtime where to execute a task, preferably close to the data items specified in the clause. However, this functionality was not made available for the taskloop construct, which parallelizes a loop by creating a set of tasks, where each task typically handles one or more iterations of the loop. Specifically, the depend clause could not be used to express dependencies, either between tasks within a taskloop or between tasks generated by a taskloop and other tasks, which limited the applicability of taskloop.
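For reference, here is a minimal sketch of the two clauses on plain task constructs, as available since OpenMP 5.0. The function name `produce_then_consume` and its parameters are hypothetical and chosen only for illustration. When compiled without OpenMP support, the pragmas are ignored and the code runs serially with the same result.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: one task fills data[0..n-1], a second task sums it. */
double produce_then_consume(double *data, size_t n)
{
    assert(data != NULL && n > 0);
    double sum = 0.0;

    #pragma omp parallel
    #pragma omp single
    {
        /* Producer: writes the array. The affinity clause is only a hint
           that the task should run close to where data is stored. */
        #pragma omp task depend(out: data[0:n]) affinity(data[0:n])
        for (size_t i = 0; i < n; i++)
            data[i] = (double)i;

        /* Consumer: the in dependence orders it after the producer. */
        #pragma omp task depend(in: data[0:n]) affinity(data[0:n]) shared(sum)
        for (size_t i = 0; i < n; i++)
            sum += data[i];
    }   /* implicit barrier: both tasks have completed here */

    return sum;
}
```

Calling `produce_then_consume(buf, 5)` on a five-element buffer fills it with 0 to 4 and returns their sum.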

OpenMP 6.0 introduced the task_iteration directive, which, when used with a taskloop construct, allows for fine-grained control over the creation and properties of individual tasks within the loop. Each task_iteration directive within a taskloop signals the creation of a new task with corresponding properties. With this functionality, one can express:

- Dependencies: The depend clause on a task_iteration directive lets you specify data dependencies between tasks generated by the taskloop, as well as between tasks of this taskloop and other tasks (standalone tasks or, for example, tasks generated by other taskloops).
- Affinity: The affinity clause can be used to specify data affinity for individual tasks. This enables optimizing data locality and improving cache utilization.
- Conditions: The if clause can be used to conditionally generate tasks within the taskloop. This can be helpful in situations where not all iterations of the loop need to generate a dependency, in particular to reduce overhead.
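As an illustration of the affinity item in the list above, the following is a minimal sketch of a taskloop whose tasks carry a data affinity hint. The function name `scale_with_affinity`, its parameters, and the grainsize of 4 are assumptions made for this example. Compiler support for OpenMP 6.0 may still be limited; without OpenMP enabled, the pragmas are ignored and the loop runs serially with the same result.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: scales every element of a[0..n-1] by factor. */
void scale_with_affinity(double *a, int n, double factor)
{
    assert(a != NULL && n >= 0);

    #pragma omp parallel
    #pragma omp single
    {
        /* Each generated task handles 4 iterations (the last one may get fewer). */
        #pragma omp taskloop grainsize(strict: 4)
        for (int i = 0; i < n; i++)
        {
            /* Hint to the runtime: run the task close to the data it touches. */
            #pragma omp task_iteration affinity(a[i])
            a[i] *= factor;
        }
    }   /* all tasks have completed here */
}
```

The affinity clause remains a hint: the result is the same wherever the runtime decides to execute the tasks.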

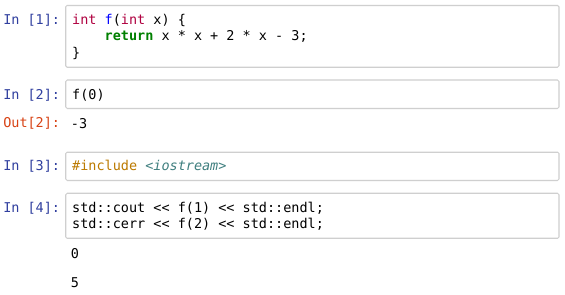

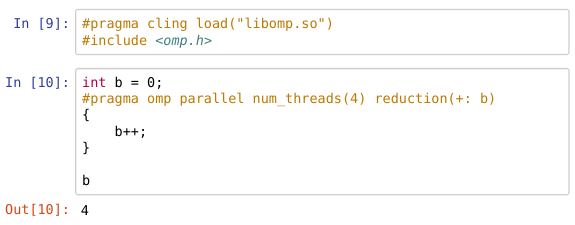

Let’s consider the following artificial example code.

    // TL1 taskloop
    #pragma omp taskloop nogroup
    for (int i = 1; i < n; i++)
    {
        #pragma omp task_iteration depend(inout: A[i]) depend(in: A[i-1])
        A[i] += A[i] * A[i-1];
    }

    // TL2 taskloop + grainsize
    #pragma omp taskloop grainsize(strict: 4) nogroup
    for (int i = 1; i < n; i++)
    {
        // The if clause belongs to task_iteration (note the line continuation)
        #pragma omp task_iteration depend(inout: A[i]) depend(in: A[i-4]) \
                                   if ((i % 4) == 0 || i == n-1)
        A[i] += A[i] * A[i-1];
    }

    // T3 other task
    #pragma omp task depend(in: A[n-1])
    { /* work that reads A[n-1] */ }

The first taskloop construct, TL1, parallelizes a loop that has an obvious dependency: every iteration i depends on the previous iteration i-1. This is expressed with the depend clauses on the task_iteration directive and results in dependencies between the tasks generated by this taskloop.

The second taskloop construct, TL2, parallelizes the loop by creating tasks that each execute four iterations, because of the grainsize clause with the strict modifier. In addition, a task dependency is only created if the expression of the if clause evaluates to true, limiting the overall number of dependencies per task.
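To make the effect of the grainsize and the if expression concrete, the following sketch enumerates the iterations of TL2 for a given n. It assumes that the chunks are contiguous and start at the first iteration i = 1; the helper names `tl2_task_of`, `tl2_if_expr` and `tl2_print_layout` are hypothetical and not part of OpenMP.

```c
#include <assert.h>
#include <stdio.h>

enum { GRAIN = 4 };  /* grainsize used by TL2 */

/* Index of the task that executes iteration i (the loop starts at i = 1),
   assuming contiguous chunks of GRAIN iterations. */
int tl2_task_of(int i)
{
    assert(i >= 1);
    return (i - 1) / GRAIN;
}

/* Value of the if expression of TL2 for iteration i and loop bound n. */
int tl2_if_expr(int i, int n)
{
    return (i % GRAIN) == 0 || i == n - 1;
}

/* Prints one line per iteration of TL2: iteration, task index, if expression. */
void tl2_print_layout(int n)
{
    for (int i = 1; i < n; i++)
        printf("i=%2d  task=%d  if=%s\n",
               i, tl2_task_of(i), tl2_if_expr(i, n) ? "true" : "false");
}
```

For n = 10, iterations 1 to 4 fall into task 0, iterations 5 to 8 into task 1, and iteration 9 into task 2. The if expression is true only for i = 4, 8 and 9, that is, for exactly one iteration per task.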

The remaining standalone task, T3, is a regular explicit task that depends on the final element of array A, which is produced by the last task of TL2. Through the chain of dependencies, it therefore executes only after all previously generated tasks have completed.
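The interplay between a taskloop with dependencies and a standalone consumer task can be sketched as a complete function. This sketch combines only TL1 with a task in the style of T3; the function name `run_tl1_then_consumer` and the variable `last` are hypothetical. It requires a compiler that implements task_iteration; without OpenMP enabled, the pragmas are ignored and the function computes the same values serially.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical driver: runs the TL1 recurrence on A[0..n-1] and returns
   the value of A[n-1] as read by a T3-style consumer task. */
double run_tl1_then_consumer(double *A, int n)
{
    assert(A != NULL && n >= 2);
    double last = 0.0;

    #pragma omp parallel
    #pragma omp single
    {
        /* TL1: each iteration depends on the result of the previous one. */
        #pragma omp taskloop nogroup
        for (int i = 1; i < n; i++)
        {
            #pragma omp task_iteration depend(inout: A[i]) depend(in: A[i-1])
            A[i] += A[i] * A[i-1];
        }

        /* T3-style task: ordered after the task that produces A[n-1]. */
        #pragma omp task depend(in: A[n-1]) shared(last)
        last = A[n-1];
    }   /* implicit barrier: all tasks have finished */

    return last;
}
```

With all elements of A initialized to 1.0, the recurrence yields A[i] = i + 1, so for n = 8 the consumer task reads the value 8.0.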